This suggests that a new fleet of stealthed fighters is becoming operational and we will soon know all about it as well.

This site tracks what is happening through satellite feeds. Thus we have a set of informed eyeballs. Of course it is none of our business and what we get to see is what we can expect anyway.

All interesting and good to know...

A Dozen Mystery Objects Suddenly Popped Out Of Hangars At Tonopah Test Range Airport

The base is known to be the shadowy home of aircraft that move from a developmental state at Area 51 and into an operational, but still secretive one.

By Tyler Rogoway

December 17, 2019

The War Zone

PHOTO © 2019 PLANET LABS INC. ALL RIGHTS RESERVED. REPRINTED BY PERMISSION.

https://www.thedrive.com/the-war-zone/31429/a-dozen-mystery-objects-suddenly-popped-out-of-hangars-at-tonopah-test-range-airport

Tonopah Test Range Airport, located along the northern edge of the sprawling Nevada Test and Training Range, may not get all the pop culture attention that nearby Area 51 gets, but in many ways, it is just as fascinating. It was born out of a program that saw American fighter pilots secretly flying captured MiGs against their fellow aviators. Not long after that program spun up, the remote installation was greatly expanded to house the F-117 Nighthawk force during the early and deeply classified part of its career. It has since housed the semi-mothballed F-117 fleet following its official retirement more than a decade ago. It was also the original home of the RQ-170 Sentinel. Today, the high-security base continues to support a number of secretive programs, as well as testing at the nearby range. Now, highly unusual activity around a dozen hangars at the shadowy installation has been caught on satellite.

The image in question was snapped at around 10:15 AM local time on December 6th, 2019 by one of Planet Labs' PlanetScope satellites, which image the vast majority of the earth daily. The three-meter resolution image shows the front row of the southern-most 'canyon' of hangars, which were originally built for the F-117 program, with seemingly identical craft sitting in front of or at least protruding out of the hangars. These are also the hangars that appear to house at least one secretive aircraft, which has been spotted peeking out in multiple prior satellite images. But the December 6th image is unique in that we could not find a similar phenomenon after checking hundreds of similar images that span months of time.

It appears that some program was uniquely active that day with a small fleet that makes up the contents of those hangars being involved.

It remains unclear exactly what we are seeing in the images. Morning shadows are clearly present, but in our experience with using and examining thousands of the PlanetScope images, objects appear smaller than they are due to the lower resolution, not the other way around. Wings and other appendages on smaller airplanes seem to disappear making them look smaller overall than they actually are. The size of the blobs we see in the image are roughly fighter-sized, which you will see for yourself in a moment.
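As a rough sanity check on that scale, the arithmetic can be sketched in a few lines of Python. This is only an illustration, not anything from the original analysis: the function name `pixels_spanned` is hypothetical, the three-meter figure is taken from the article, and the F-117 dimensions (about 20.1 m long with a 13.2 m wingspan) are approximate publicly cited figures assumed here for comparison.

```python
# Rough scale check: how many pixels would a fighter-sized aircraft cover
# in a three-meter resolution image? All names here are illustrative.

GSD_M = 3.0  # metres per pixel, from the article's "three-meter resolution"

# Approximate, publicly cited F-117 dimensions (assumed for comparison).
F117_LENGTH_M = 20.1
F117_WINGSPAN_M = 13.2


def pixels_spanned(extent_m, gsd_m=GSD_M):
    """Return the number of pixels an object of the given extent covers."""
    return extent_m / gsd_m


if __name__ == "__main__":
    for label, extent in (("length", F117_LENGTH_M), ("wingspan", F117_WINGSPAN_M)):
        print(f"F-117 {label}: about {pixels_spanned(extent):.1f} pixels")
```

With only a handful of pixels along each axis, thin features such as wingtips and tails can easily fall below what a single pixel resolves, which is consistent with the point that aircraft tend to look smaller, not larger, in this kind of imagery.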

The question is what type of aircraft are we seeing if indeed that is the case? If they are the elusive unmanned combat air vehicles the USAF won't even acknowledge or RQ-170 Sentinel derivative aircraft, which is quite possible, they could be totally outside of their hangars based on their size. If they are larger aircraft, they could have a good portion of their fuselages sitting forward of the hangar doors.

PHOTO © 2019 PLANET LABS INC. ALL RIGHTS RESERVED. REPRINTED BY PERMISSION.

One reason for this could be to establish and troubleshoot any satellite data-link issues before the aircraft taxi to the hammerhead and then depart. Advanced unmanned aircraft, in particular, which don't need to even rely on operators located at the same bases from which they fly, would put a premium on checking out their beyond-line-of-sight data-links before heading out toward the runway. Other potential explanations exist, as well. For instance, maybe this is how they are fueled, although a dozen fuel trucks fueling aircraft at the same time seems highly unlikely, especially based on historical higher resolution satellite imagery of the facility. Fuel trucks are not an exact fit for what we are seeing here either, but even if that were the case, it means a dozen aircraft of an unknown type were going out on a mission together for one reason or another.

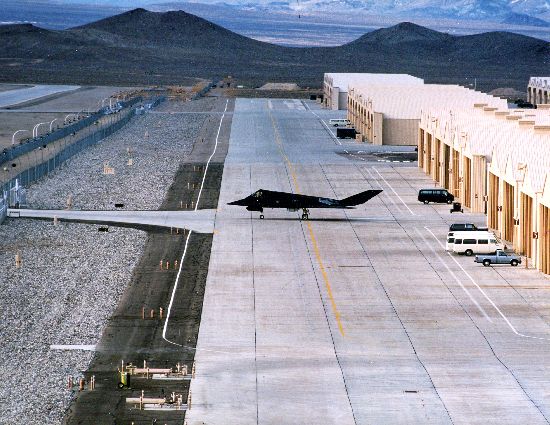

Even the flyable F-117 force that still calls the base home is estimated at around four to six aircraft at most, not nearly a dozen. In addition, the F-117s don't occupy the "Red Hangar" cluster seen here; they use the "White Hangar" cluster to the far north of the ramp. The Blue hangar cluster lies between the two.

Public Domain

F-117 near the White hangar group decades ago.

A few of the hangars at Tonopah Test Range Airport also hold foreign ground-based threat systems that get used for certain exercises, like Russian-built Scud and SAM missile launchers. But many of those systems are stored together in single hangars, not one system per hangar, as seems to be indicated in the photo, nor has there ever been the impression that entire rows of hangars are full of these systems. Beyond that, placing single examples of identical or near-identical systems in front of each hangar makes little sense, but it is something to consider.

There has been a substantial amount of activity at the base in the latter half of 2019. Nearly all of the visible daytime activity picked up on satellite occurs on the northern end of the ramp, between the Blue and White hangar clusters. U.S. Special Operations Command's CASA CN-235 transports, similar ones likely operated by private contractors, and other special operations aircraft have a common presence on the ramp in this area. The base is an established testing locale for USSOCOM aviation-related initiatives. The F-117s can also be seen fairly regularly using this ramp area exclusively. As many as four have been seen there at one time in daily satellite imagery, which you can see below.

PHOTO © 2019 PLANET LABS INC. ALL RIGHTS RESERVED. REPRINTED BY PERMISSION.

An image taken on September 9th, 2019 shows a row of four F-117s sitting across from a pair of special operations transports and what is likely an Army Black Hawk, which also have a presence in this part of the ramp from time to time.

The F-117s seen in this image also provide good context for the size of the objects seen in the image in question. The two appear to be roughly similar in size, but the mystery aircraft could be substantially larger if they are only sitting partially out of their hangars.

Beyond that, clearly advanced airborne signature testing is conducted out of the northern ramp area. The Air Force's shadowy NT-43A, which goes by the callsign "RAT 55" and is used as an airborne signature measurement laboratory, is a common visitor to this part of the base, as are other airliner-sized testbed conversion aircraft. Read all about RAT 55 in this past piece of mine.

This isn't surprising because, as posited years ago, the flying F-117s are involved with signature control experiments and RAT 55's services are surely used by other more modern, but undisclosed tenants of the base. It's also worth noting C-17s grace the ramp somewhat regularly in this area, as well.

PHOTO © 2019 PLANET LABS INC. ALL RIGHTS RESERVED. REPRINTED BY PERMISSION.

A September 24th, 2019 image showing two airliner derivative aircraft and what appears to be a C-21 and CN-235 on the ramp between the Blue and White hangars. One is very likely RAT 55, the other may be the 757 F-22 testbed aircraft.

Below is a high-resolution image from March 13, 2019, of the base showing RAT 55, some CN-235s, and what appears to be a group of people watching some sort of flight on the north end of the White hangar section. Also, note all the cars that line the rear of the northwesternmost trio of Blue hangars. It seems these are used constantly for SOCOM operations and assets.

PHOTO © 2019 PLANET LABS INC. ALL RIGHTS RESERVED. REPRINTED BY PERMISSION.

High-resolution image taken on March 12, 2019. It's worth noting that the reconstruction of some of the base's operating surfaces has largely been completed now. When this was taken construction was deeply underway. The rumors of some huge expansion of the base are false. Like any airfield, the runway and movement areas need maintenance and rehabilitation. The only new taxiway area is the small cutout on the northernmost taxiway that stops abruptly. It is likely used for engine runs.

But once again, the activity between the White and Blue hangar sets occurs in broad daylight; this is far less the case farther south on the base. With this in mind, whatever we are seeing in the image in question made a somewhat rare daytime appearance.

Tyler Rogoway @Aviation_Intel

Rare shot of an F-117 rolling out from the canyons and toward the gated taxiway at Tonopah Test Range Airport. It's funny, the hangar to the rear right-hand side of the F-117's tail is where this mystery craft popped up on Google Earth recently:

http://www.thedrive.com/the-war-zone/24495/censored-craft-near-hangar-appears-in-satellite-image-of-secretive-tonopah-test-range-airport …

As for any major exercises that may have been related to this occurrence, the Air Force Weapons School's capstone exercise was underway the week after, but it could have begun sooner. Cutting-edge tactics and systems are often part of this highly complex undertaking that makes heavy use of the Nevada Test and Training Range (NTTR) and its many assets, some of which are extremely shy. F-117s appeared to provide support for the exercise in the adversary role the following week, but once again, the hangars of interest are not where the F-117s live, nor are there a dozen of them flying regardless.

With all this in mind, whatever is calling that row of hangars home remains a mystery—one that keeps surfacing and growing. Historically, Tonopah has proven itself as the natural place where secret aircraft migrate to after being in an experimentation and development state at Area 51. At Tonopah, they move to a semi-operational and even fully operational state while still remaining under a cloak of secrecy. We saw this with the F-117 and the RQ-170.

With this in mind, are we seeing a force of unmanned combat air vehicles, stealth helicopters, or even a manned spy aircraft that has existed in the black for many years, or something totally different? We just don't know, but this image serves as an additional reminder that a pocket fleet, or pocket fleets, of secret aircraft, may very well be living in the shadows at Tonopah.

In fact, we would be amazed if that wasn't the case.

Contact the author: Tyler@thedrive.com
