Turns out targeted research hardly demands an ocean of unrelated data and does data properly show us logic structures?

This seems to make sense for now. And just how much reppetition does all thgat processing entail?

Exactly how can billions of core samples located using human logic actually find us a mine? Particularly when it is not there?

success in knowledge means locating an indicator, any indicator, and following it. Otherwise, you are immediately exploring the rest of Canada. That is why i am unconvinced about the BIG DATA MEME in AI.

A Pharma Company (Really?) Leading the Way in AI Innovation? Yes, Really!

By Brian Wang

NextBigFuture

Jan 22

In September, Verseon, a pioneering company in physics- and AI-powered drug discovery, revealed that its AI technology significantly outperforms Google’s cutting-edge Deep Learning models in terms of prediction accuracy for a wide range of datasets. Just a month later, the company announced a breakthrough in combining multiple AI models in dynamic ways. And most recently, they’ve shown that their AI platform, VersAI™, trains models 3,000 times faster than Google AutoML on standard benchmark datasets.

So, how are they pulling this off?

The answer lies in their proprietary VersAI™ technology.

Unlike most AI companies – which rely on off-the-shelf AI tools from giants like Google, Meta, and OpenAI – Verseon has built its own proprietary AI technology from the ground up. These other companies primarily use Deep Learning frameworks that depend on vast amounts of high-quality training data to function properly. “Big Data” in the form of billions of web pages is available to train large language models, and in the form of millions of transactions for specific products to train models that make personalized shopping recommendations. But in most AI use cases, large, dense datasets like those are hard to come by.

For most real-world applications, the available datasets are small and sparse. This poses a significant challenge for traditional Deep Learning. Most AI companies today struggle to gather enough high-quality data to build reliable models for their intended applications.

As Guang-Bin Huang, a professor at NTU in Singapore and one of the lead authors of a landmark AI paper, points out, “While deep learning excels in certain applications, it’s not the best approach for small or sparse datasets. In these cases, its performance can be severely limited.”

Verseon, however, has developed an entirely new AI architecture that is specifically designed to handle small, sparse datasets – the kind that are common in many real-world applications, including drug discovery.

What Makes VersAI™ Different from Deep Learning?

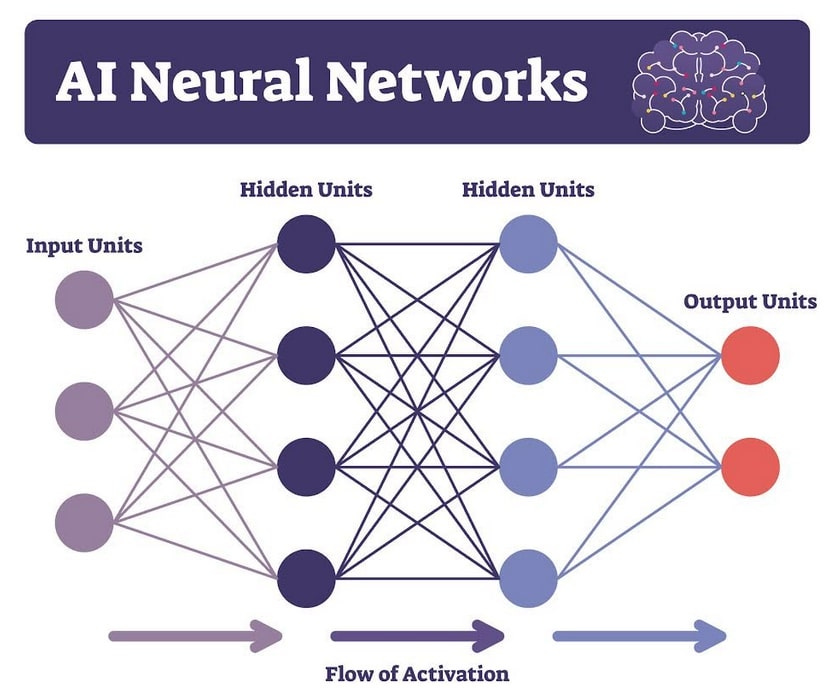

The key difference lies in the neural network structure. In any AI system, the neural network is composed of input nodes (where data enters), hidden nodes (which process the data), and output nodes (where the results are produced).

Deep Learning uses a monolithic neural network with multiple hidden layers to process data. The more complex the data, the more layers are required, and each additional layer adds significant computational overhead. As a result, training these models is slow and resource-intensive.

VersAI™, by contrast, takes a fundamentally different approach. As Verseon’s Ed Ratner describes, “Unlike Deep Learning AI, VersAI uses a completely novel and proprietary neural ‘network of networks’ architecture.” Instead of using one large network with many layers, VersAI™ uses multiple smaller, independent neural networks, each with a single hidden layer. These networks process the data in parallel, and then their outputs are dynamically weighted and combined to generate a final result. This method substantially reduces the error rate in predictions for small and sparse datasets. And it also consistently reduces model-training times regardless of the size or density of the training dataset.

Why Does This Matter?

Deep Learning models become dramatically more complicated and slower as the size and complexity of training data increase, leading to longer training times and consequent delays in building deployable models. In contrast, VersAI’s 3,000 times faster training allows building models in near real time – a virtue critical in applications like ecommerce where trends can change fast.

This training time advantage also leads to much lower deployment costs. The reduced computational intensity of training models in VersAI™ relative to Deep Learning not only requires less costly computer hardware, but it also uses far less electricity. In short, VersAI™ is much greener AI.

A Pharma Company Revolutionizing AI

It’s truly remarkable that a pharmaceutical company is at the forefront of developing fundamental new AI technology that outperforms industry titans like Google, Meta, and OpenAI – particularly when it comes to handling the small, sparse datasets that are common in real-world applications. And the potential of VersAI™ extends far beyond drug discovery. Given its impressive performance so far, this innovative technology could have a transformative impact on the reliability and effectiveness of AI across many consumer, science, and business applications, improving the way AI is used in our everyday lives.

No comments:

Post a Comment