Time, then, is a succession of such creations filling the void, because any such act produces several potential successor acts of creation, imperfectly packed. It sort of looks like a Big Bang filling the void. Understand, though, that all this is sublight and also produces light-speed photons.

Further self-assembly and decay then produce the universe we see. All such acts of creation end up producing a galaxy of sublight particles, which we can see.

In the end, time looks like the smallest possible scale in an empirical universe and is uniform within its galaxy at least.

Time as we see it has obviously expanded, but the rate is declining by the inverse of observed size.

Time is an object

Not a backdrop, an illusion or an emergent phenomenon, time has a physical size that can be measured in laboratories

Red-eyed tree frog, near Arenal Volcano, Costa Rica. Photo by Ben Roberts/Panos Pictures

Sara Walker is an astrobiologist and theoretical physicist at Arizona State University, where she is deputy director of the Beyond Center for Fundamental Concepts in Science and professor in the School of Earth and Space Exploration. She is also external professor at the Santa Fe Institute and a fellow at the Berggruen Institute.

Lee Cronin is Regius Chair of Chemistry at the University of Glasgow in Scotland and CEO of Chemify.

Published in association with Santa Fe Institute, an Aeon Strategic Partner

A timeless universe is hard to imagine, but not because time is a technically complex or philosophically elusive concept. There is a more structural reason: imagining timelessness requires time to pass. Even when you try to imagine its absence, you sense it moving as your thoughts shift, your heart pumps blood to your brain, and images, sounds and smells move around you. The thing that is time never seems to stop. You may even feel woven into its ever-moving fabric as you experience the Universe coming together and apart. But is that how time really works?

According to Albert Einstein, our experience of the past, present and future is nothing more than ‘a stubbornly persistent illusion’. According to Isaac Newton, time is nothing more than backdrop, outside of life. And according to the laws of thermodynamics, time is nothing more than entropy and heat. In the history of modern physics, there has never been a widely accepted theory in which a moving, directional sense of time is fundamental. Many of our most basic descriptions of nature – from the laws of movement to the properties of molecules and matter – seem to exist in a universe where time doesn’t really pass. However, recent research across a variety of fields suggests that the movement of time might be more important than most physicists had once assumed.

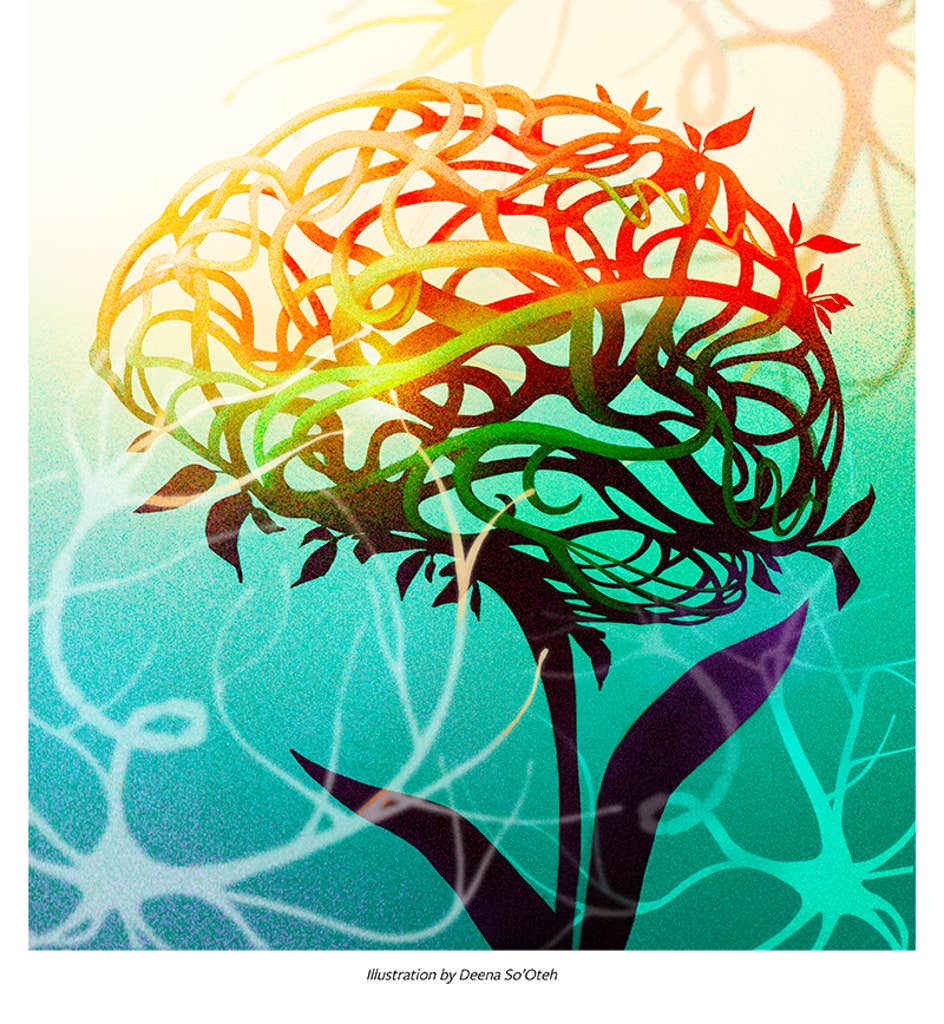

A new form of physics called assembly theory suggests that a moving, directional sense of time is real and fundamental. It suggests that the complex objects in our Universe that have been made by life, including microbes, computers and cities, do not exist outside of time: they are impossible without the movement of time. From this perspective, the passing of time is not only intrinsic to the evolution of life or our experience of the Universe. It is also the ever-moving material fabric of the Universe itself. Time is an object. It has a physical size, like space. And it can be measured at a molecular level in laboratories.

The unification of time and space radically changed the trajectory of physics in the 20th century. It opened new possibilities for how we think about reality. What could the unification of time and matter do in our century? What happens when time is an object?

For Newton, time was fixed. In his laws of motion and gravity, which describe how objects change their position in space, time is an absolute backdrop. Newtonian time passes, but never changes. And it’s a view of time that endures in modern physics – even in the wave functions of quantum mechanics time is a backdrop, not a fundamental feature. For Einstein, however, time was not absolute. It was relative to each observer. He described our experience of time passing as ‘a stubbornly persistent illusion’. Einsteinian time is what is measured by the ticking of clocks; space is measured by the ticks on rulers that record distances. By studying the relative motions of ticking clocks and ticks on rulers, Einstein was able to combine the concepts of how we measure both space and time into a unified structure we now call ‘spacetime’. In this structure, space is infinite and all points exist at once. But time, as Einstein described it, also has this property, which means that all times – past, present and future – are equally real. The result is sometimes called a ‘block universe’, which contains everything that has and will happen in space and time. Today, most physicists support the notion of the block universe.

But the block universe was cracked before it even arrived. In the early 1800s, nearly a century before Einstein developed the concept of spacetime, Nicolas Léonard Sadi Carnot and other physicists were already questioning the notion that time was either a backdrop or an illusion. These questions would continue through the 19th century as physicists such as Ludwig Boltzmann also began to turn their minds to the problems that came with a new kind of technology: the engine.

Though engines could be mechanically reproduced, physicists didn’t know exactly how they functioned. Newtonian mechanics were reversible; engines were not. Newton’s solar system ran equally well moving forward or backward in time. However, if you drove a car and it ran out of fuel, you could not run the engine in reverse, take back the heat that was generated, and unburn the fuel. Physicists at the time suspected that engines must be adhering to certain laws, even if those laws were unknown. What they found was that engines do not function unless time passes and has a direction. By exploiting differences in temperature, engines drive the movement of heat from warm parts to cold parts. As time moves forward, the temperature difference diminishes and less ‘work’ can be done. This is the essence of the second law of thermodynamics (also known as the law of entropy) that was proposed by Carnot and later explained statistically by Boltzmann. The law describes the way that less useful ‘work’ can be done by an engine over time. You must occasionally refuel your car, and entropy must always increase.

This makes sense in the context of engines or other complex objects, but it is not helpful when dealing with a single particle. It is meaningless to talk about the temperature of a single particle because temperature is a way of quantifying the average kinetic energy of many particles. In the laws of thermodynamics, the flow and directionality of time are considered an emergent property rather than a backdrop or an illusion – a property associated with the behaviour of large numbers of objects. While thermodynamic theory introduced how time should have a directionality to its passage, this property was not fundamental. In physics, ‘fundamental’ properties are reserved for those properties that cannot be described in other terms. The arrow of time in thermodynamics is therefore considered ‘emergent’ because it can be explained in terms of more fundamental concepts, such as entropy and heat.

Charles Darwin, working between the steam engine era of Carnot and the emergence of Einstein’s block universe, was among the first to clearly see how life must exist in time. In the final sentence from On the Origin of Species (1859), he eloquently captured this perspective: ‘[W]hilst this planet has gone cycling on according to the fixed law of gravity, from so simple a beginning endless forms most beautiful and most wonderful have been and are being evolved.’ The arrival of Darwin’s ‘endless forms’ can be explained only in a universe where time exists and has a clear directionality.

During the past several billion years, life has evolved from single-celled organisms to complex multicellular organisms. It has evolved from simple societies to teeming cities, and now a planet potentially capable of reproducing its life on other worlds. These things take time to come into existence because they can emerge only through the processes of selection and evolution.

We think Darwin’s insight does not go deep enough. Evolution accurately describes changes observed across different forms of life, but it does much more than this: it is the only physical process in our Universe that can generate the objects we associate with life. This includes bacteria, cats and trees, but also things like rockets, mobile phones and cities. None of these objects fluctuates into existence spontaneously, despite what popular accounts of modern physics may claim can happen. These objects are not random flukes. Instead, they all require a ‘memory’ of the past to be made in the present. They must be produced over time – a time that continually moves forward. And yet, according to Newton, Einstein, Carnot, Boltzmann and others, time is either nonexistent or merely emergent.

The times of physics and of evolution are incompatible. But this has not always been obvious because physics and evolution deal with different kinds of objects. Physics, particularly quantum mechanics, deals with simple and elementary objects: quarks, leptons and force carrier particles of the Standard Model. Because these objects are considered simple, they do not require ‘memory’ for the Universe to make them (assuming sufficient energy and resources are available). Think of ‘memory’ as a way to describe the recording of actions or processes that are needed to build a given object. When we get to the disciplines that engage with evolution, such as chemistry and biology, we find objects that are too complex to be produced in abundance instantaneously (even when energy and materials are available). They require memory, accumulated over time, to be produced. As Darwin understood, some objects can come into existence only through evolution and the selection of certain ‘recordings’ from memory to make them.

This incompatibility creates a set of problems that can be solved only by making a radical departure from the current ways that physics approaches time – especially if we want to explain life. While current theories of quantum mechanics can explain certain features of molecules, such as their stability, they cannot explain the existence of DNA, proteins, RNA, or other large and complex molecules. Likewise, the second law of thermodynamics is said to give rise to the arrow of time and explanations of how organisms convert energy, but it does not explain the directionality of time, in which endless forms are built over evolutionary timescales with no final equilibrium or heat-death for the biosphere in sight. Quantum mechanics and thermodynamics are necessary to explain some features of life, but they are not sufficient.

These and other problems led us to develop a new way of thinking about the physics of time, which we have called assembly theory. It describes how much memory must exist for a molecule or combination of molecules – the objects that life is made from – to come into existence. In assembly theory, this memory is measured across time as a feature of a molecule by focusing on the minimum memory required for that molecule (or molecules) to come into existence. Assembly theory quantifies selection by making time a property of objects that could have emerged only via evolution.

We began developing this new physics by considering how life emerges through chemical changes. The chemistry of life operates combinatorially as atoms bond to form molecules, and the possible combinations grow with each additional bond. These combinations are made from approximately 92 naturally occurring elements, which chemists estimate can be combined to build as many as 10⁶⁰ different molecules – 1 followed by 60 zeroes. To become useful, each individual combination would need to be replicated billions of times – think of how many molecules are required to make even a single cell, let alone an insect or a person. Making copies of any complex object takes time because each step required to assemble it involves a search across the vastness of combinatorial space to select which molecules will take physical shape.

Consider the macromolecular proteins that living things use as catalysts within cells. These proteins are made from smaller molecular building blocks called amino acids, which combine to form long chains typically between 50 and 2,000 amino acids long. If every possible 100-amino-acid-long protein was assembled from the 20 most common amino acids that form proteins, the result would not just fill our Universe but 10²³ universes.
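The size of this sequence space can be checked with a few lines of arithmetic. The sketch below assumes only the figures given in the text (20 amino acids, chains of 100 residues, and the earlier estimate of possible molecules as 1 followed by 60 zeroes). The function name `sequence_count` is a hypothetical helper introduced for illustration, and the sketch counts sequences only; it does not attempt the conversion into volumes of universes.

```python
def sequence_count(alphabet_size: int, length: int) -> int:
    """Number of distinct linear chains of `length` units drawn from
    `alphabet_size` kinds of building block (order matters, repeats allowed)."""
    return alphabet_size ** length


# Every possible 100-residue chain over the 20 common amino acids.
proteins = sequence_count(20, 100)

# The chemists' estimate of possible molecules quoted in the text.
small_molecules = 10 ** 60

# Python integers have arbitrary precision, so both values are exact.
print(f"20^100 has {len(str(proteins))} decimal digits")
print(f"it exceeds the molecule estimate by a factor with "
      f"{len(str(proteins // small_molecules))} digits")
```

Counting digits rather than printing the raw integer keeps the output readable while still using exact arithmetic.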

Photo by Donna Enriquez/Flickr

The space of all possible molecules is hard to fathom. As an analogy, consider the combinations you can build with a given set of Lego bricks. If the set contained only two bricks, the number of combinations would be small. However, if the set contained thousands of pieces, like the 5,923-piece Lego model of the Taj Mahal, the number of possible combinations would be astronomical. If you specifically needed to build the Taj Mahal according to the instructions, the space of possibilities would be limited, but if you could build any Lego object with those 5,923 pieces, there would be a combinatorial explosion of possible structures that could be built – the possibilities grow exponentially with each additional block you add. If you connected two Lego structures you had already built every second, you would not be able to exhaust all possible objects of the size of the Lego Taj Mahal set within the age of the Universe. In fact, any space built combinatorially from even a few simple building blocks will have this property. This includes all possible cell-like objects built from chemistry, all possible organisms built from different cell-types, all possible languages built from words or utterances, and all possible computer programs built from all possible instruction sets. The pattern here is that combinatorial spaces seem to show up when life exists. That is, life is evident when the space of possibilities is so large that the Universe must select only some of that space to exist. Assembly theory is meant to formalise this idea. In assembly theory, objects are built combinatorially from other objects and, just as you might use a ruler to measure how big a given object is spatially, assembly theory provides a measure – called the ‘assembly index’ – to measure how big an object is in time.
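A toy version of the assembly index can be computed for short strings, with letters standing in for building blocks. The sketch below rests on an assumption the passage above does not spell out: that the index is the smallest number of pairwise joins needed to build the target when anything already built may be reused. The function name `assembly_index` and the use of strings in place of molecules are illustrative choices, not the laboratory method.

```python
from itertools import product


def assembly_index(target: str) -> int:
    """Minimum number of pairwise joins needed to build `target` from its
    individual characters, reusing any previously built piece.

    Exhaustive iterative-deepening search: cost grows exponentially with
    length, so this is only suitable for short strings.
    """
    if len(target) <= 1:
        return 0
    basics = frozenset(target)  # the single-character building blocks

    def search(pool: frozenset, steps_left: int) -> bool:
        if target in pool:
            return True
        if steps_left == 0:
            return False
        for left, right in product(pool, repeat=2):
            joined = left + right
            # Only pieces that occur inside the target can be useful.
            if joined in target and joined not in pool:
                if search(pool | {joined}, steps_left - 1):
                    return True
        return False

    depth = 1
    # Appending one character at a time always works, so this terminates
    # by depth len(target) - 1 at the latest.
    while not search(basics, depth):
        depth += 1
    return depth


for word in ("ABCD", "ABAB", "BANANA"):
    print(word, assembly_index(word))
```

A string with a repeated motif, such as `ABAB`, needs fewer joins than a string of the same length with no repeats, because an earlier product can be reused. That reuse is the sense in which an object carries a record of how it was built.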

The Lego Taj Mahal set is equivalent to a complex molecule in this analogy. Reproducing a specific object, like a Lego set, in a way that isn’t random requires selection within the space of all possible objects. That is, at each stage of construction, specific objects or sets of objects must be selected from the vast number of possible combinations that could be built. Alongside selection, ‘memory’ is also required: information is needed in the objects that exist to assemble the specific new object, which is implemented as a sequence of steps that can be completed in finite time, like the instructions required to build the Lego Taj Mahal. More complex objects require more memory to come into existence.

In assembly theory, objects grow in their complexity over time through the process of selection. As objects become more complex, their unique parts will increase, which means local memory must also increase. This ‘local memory’ is the causal chain of events in how the object is first ‘discovered’ by selection and then created in multiple copies. For example, in research into the origin of life, chemists study how molecules come together to become living organisms. For a chemical system to spontaneously emerge as ‘life’, it must self-replicate by forming, or catalysing, self-sustaining networks of chemical reactions. But how does the chemical system ‘know’ which combinations to make? We can see ‘local memory’ in action in these networks of molecules that have ‘learned’ to chemically bind together in certain ways. As the memory requirements increase, the probability that an object was produced by chance drops to zero because the number of alternative combinations that weren’t selected is just too high. An object, whether it’s a Lego Taj Mahal or a network of molecules, can be produced and reproduced only with memory and a construction process. But memory is not everywhere; it is local in space and time. This means an object can be produced only where there is local memory that can guide the selection of which parts go where, and when.

In assembly theory, ‘selection’ refers to what has emerged in the space of possible combinations. It is formally described through an object’s copy number and complexity. Copy number or concentration is a concept used in chemistry and molecular biology that refers to how many copies of a molecule are present in a given volume of space. In assembly theory, complexity is as significant as the copy number. A highly complex molecule that exists only as a single copy is not important. What is of interest to assembly theory are complex molecules with a high copy number, which is an indication that the molecule has been produced by evolution. This complexity measurement is also known as an object’s ‘assembly index’. This value is related to the amount of physical memory required to store the information to direct the assembly of an object and set a directionality in time from the simple to the complex. And, while the memory must exist in the environment to bring the object into existence, in assembly theory the memory is also an intrinsic physical feature of the object. In fact, it is the object.

Life is stacks of objects building other objects that build other objects – it’s objects building objects, all the way down. Some objects emerged only relatively recently, such as synthetic ‘forever chemicals’ made from organofluorine chemical compounds. Others emerged billions of years ago, such as photosynthesising plant cells. Different objects have different depths in time. And this depth is directly related to both an object’s assembly index and copy number, which we can combine into a number: a quantity called ‘Assembly’, or A. The higher the Assembly number, the deeper an object is in time.
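The text does not state how assembly index and copy number are combined into A. As an assumption taken from the published formulation of assembly theory, the sketch below uses A = Σ exp(a_i) · (n_i − 1) / N_T, where a_i is the assembly index of the i-th distinct object, n_i is its copy number and N_T is the total number of objects in the sample. The function name `ensemble_assembly` and the example values are hypothetical.

```python
import math
from typing import Iterable, Tuple


def ensemble_assembly(objects: Iterable[Tuple[int, int]]) -> float:
    """Assembly A of a sample, given (assembly_index, copy_number) pairs.

    Assumed form (not stated in the article itself):
        A = sum_i exp(a_i) * (n_i - 1) / N_T
    where N_T is the total number of objects in the sample.
    """
    pairs = list(objects)
    total = sum(n for _, n in pairs)
    if total == 0:
        return 0.0
    return sum(math.exp(a) * (n - 1) / total for a, n in pairs)


# A complex object seen once contributes nothing ...
single_copy = ensemble_assembly([(20, 1)])
# ... while the same object seen many times dominates the sum.
many_copies = ensemble_assembly([(20, 1000)])
print(single_copy, many_copies)
```

Under this assumed form, the `(n_i - 1)` factor makes a one-off object count for zero however complex it is, which matches the claim that a complex molecule existing as a single copy is not evidence of selection.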

To measure assembly in a laboratory, we chemically analyse an object to count how many copies of a given molecule it contains. We then infer the object’s complexity, known as its molecular assembly index, by counting the number of parts it contains. These molecular parts, like the amino acids in a protein string, are often inferred by determining an object’s molecular assembly index – a theoretical assembly number. But we are not inferring theoretically. We are ‘counting’ the molecular components of an object using three visualising techniques: mass spectrometry, infrared and nuclear magnetic resonance (NMR) spectroscopy. Remarkably, the number of components we’ve counted in molecules maps to their theoretical assembly numbers. This means we can measure an object’s assembly index directly with standard lab equipment.

A high Assembly number – a high assembly index and a high copy number – indicates that it can be reliably made by something in its environment. This could be a cell that constructs high-Assembly molecules like proteins, or a chemist that makes molecules with an even higher Assembly value, such as the anti-cancer drug Taxol (paclitaxel). Complex objects with high copy numbers did not come into existence randomly but are the result of a process of evolution or selection. They are not formed by a series of chance encounters, but by selection in time. More specifically, a certain depth in time.

This is a difficult concept. Even chemists find this idea hard to grasp since it is easy to imagine that ‘complex’ molecules form by chance interactions with their environment. However, in the laboratory, chance interactions often lead to the production of ‘tar’ rather than high-Assembly objects. Tar is a chemist’s worst nightmare, a messy mixture of molecules that cannot be individually identified. It is found frequently in origin-of-life experiments. In the US chemist Stanley Miller’s ‘prebiotic soup’ experiment in 1953, the amino acids that formed at first turned into a mess of unidentifiable black gloop if the experiment was run too long (and no selection was imposed by the researchers to stop chemical changes taking place). The problem in these experiments is that the combinatorial space of possible molecules is so vast for high-Assembly objects that no specific molecules are produced in high abundance. ‘Tar’ is the result.

It’s like throwing the 5,923 pieces from the Lego Taj Mahal set in the air and expecting them to come together, spontaneously, exactly as the instructions specify. Now imagine taking the pieces from 100 boxes of the same Lego set, throwing them into the air, and expecting 100 copies of the exact same building. The probabilities are incredibly low and might be zero, if assembly theory is on the right track. It is as likely as a smashed egg spontaneously reforming.
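How quickly such odds collapse can be illustrated with a deliberately crude model. The sketch below assumes that every assembly step picks uniformly and independently among a fixed number of alternatives, and that each copy is an independent attempt. Neither assumption comes from the text; the function name `log10_chance` and the example numbers are hypothetical.

```python
import math


def log10_chance(steps: int, alternatives: int, copies: int = 1) -> float:
    """log10 of the probability of following one specific assembly pathway
    `copies` times in a row.

    Toy assumptions: each of `steps` joins is chosen uniformly and
    independently among `alternatives` options, and copies are independent.
    """
    return -steps * copies * math.log10(alternatives)


# One copy of a 15-step object with 10 options per step.
print(log10_chance(steps=15, alternatives=10))
# One hundred identical copies of the same object.
print(log10_chance(steps=15, alternatives=10, copies=100))
```

The function works in logarithms because the probability for many copies is far too small for a floating-point number to represent; computed directly, it would round to zero.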

But what about complex objects that occur naturally without selection or evolution? What about snowflakes, minerals and complex storm systems? Unlike objects generated by evolution and selection, these do not need to be explained through their ‘depth in time’. Though individually complex, they do not have a high Assembly value because they form randomly and require no memory to be produced. They have a low copy number because they never exist in identical copies. No two snowflakes are alike, and the same goes for minerals and storm systems.

Assembly theory not only changes how we think about time, but how we define life itself. By applying this approach to molecular systems, it should be possible to measure if a molecule was produced by an evolutionary process. That means we can determine which molecules could have been made only by a living process, even if that process involves chemistries different to those on Earth. In this way, assembly theory can function as a universal life-detection system that works by measuring the assembly indexes and copy numbers of molecules in living or non-living samples.

In our laboratory experiments, we found that only living samples produce high-Assembly molecules. Our teams and collaborators have reproduced this finding using an analytical technique called mass spectrometry, in which molecules from a sample are ‘weighed’ in an electromagnetic field and then smashed into pieces using energy. Smashing a molecule to bits allows us to measure its assembly index by counting the number of unique parts it contains. Through this, we can work out how many steps were required to produce a molecular object and then quantify its depth in time with standard laboratory equipment.

To verify our theory that high-Assembly objects can be generated only by life, the next step involved testing living and non-living samples. Our teams have been able to take samples of molecules from across the solar system, including diverse living, fossilised and abiotic systems on Earth. These solid samples of stone, bone, flesh and other forms of matter were dissolved in a solvent and then analysed with a high-resolution mass spectrometer that can identify the structure and properties of molecules. We found that only living systems produce abundant molecules with an assembly index above an experimentally determined value of 15 steps. The cut-off between 13 and 15 is sharp, meaning that molecules made by random processes cannot get beyond 13 steps. We think this is indicative of a phase transition where the physics of evolution and selection must take over from other forms of physics to explain how a molecule was formed.
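The decision rule described here can be written as a simple check. The sketch below assumes the threshold of 15 steps quoted above; the `Detection` record, the `suggests_selection` function and the `min_copies` cut-off are hypothetical names introduced for illustration, since the text says only that the molecules must be abundant.

```python
from dataclasses import dataclass

# Experimentally determined value quoted in the text.
ASSEMBLY_INDEX_THRESHOLD = 15


@dataclass(frozen=True)
class Detection:
    assembly_index: int  # steps inferred from the fragmentation pattern
    copy_number: int     # how many copies of the molecule were observed


def suggests_selection(d: Detection, min_copies: int = 2) -> bool:
    """True if the molecule is both complex (index above the threshold)
    and present in multiple copies.

    `min_copies` is a hypothetical abundance cut-off, not a value
    taken from the experiments.
    """
    return (d.assembly_index > ASSEMBLY_INDEX_THRESHOLD
            and d.copy_number >= min_copies)


samples = [Detection(18, 1000), Detection(13, 1000), Detection(18, 1)]
for s in samples:
    print(s, suggests_selection(s))
```

Both conditions are required: a low-index molecule in high abundance can arise from ordinary chemistry, and a high-index molecule seen once could be a fluke.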

These experiments verify that only objects with a sufficiently high Assembly number – highly complex and copied molecules – seem to be found in life. What is even more exciting is that we can find this information without knowing anything else about the molecule present. Assembly theory can determine whether molecules from anywhere in the Universe were derived from evolution or not, even if we don’t know what chemistry is being used.

The possibility of detecting living systems elsewhere in the galaxy is exciting, but more exciting for us is the possibility of a new kind of physics, and a new explanation of life. As an empirical measure of objects uniquely producible by evolution, Assembly unlocks a more general theory of life. If the theory holds, its most radical philosophical implication is that time exists as a material property of the complex objects created by evolution. That is, just as Einstein radicalised our notion of time by unifying it with space, assembly theory points to a radically new conception of time by unifying it with matter.

It is radical because, as we noted, time has never been fundamental in the history of physics. Newton and some quantum physicists view it as a backdrop. Einstein thought it was an illusion. And, in the work of those studying thermodynamics, it’s understood as merely an emergent property. Assembly theory treats time as fundamental and material: time is the stuff out of which things in the Universe are made. Objects created by selection and evolution can be formed only through the passing of time. But don’t think about this time like the measured ticking of a clock or a sequence of calendar years. Time is a physical attribute. Think about it in terms of Assembly, a measurable intrinsic property of a molecule’s depth or size in time.

This idea is radical because it also allows physics to explain evolutionary change. Physics has traditionally studied objects that the Universe can spontaneously assemble, such as elementary particles or planets. Assembly theory, on the other hand, explains evolved objects, such as complex molecules, biospheres, and computers. These complex objects exist only along lineages where information has been acquired specific to their construction.

If we follow those lineages back, beyond the origin of life on Earth to the origin of the Universe, it would be logical to suggest that the ‘memory’ of the Universe was lower in the past. This means that the Universe’s ability to generate high-Assembly objects is fundamentally limited by its size in time. Just as a semi-trailer truck will not fit inside a standard home garage, some objects are too large in time to come into existence in intervals that are smaller than their assembly index. For complex objects like computers to exist in our Universe, many other objects needed to form first: stars, heavy elements, life, tools, technology, and the abstraction of computing. This takes time and is critically path-dependent due to the causal contingency of each innovation made. The early Universe may not have been capable of computation as we know it, simply because not enough history existed yet. Time had to pass and be materially instantiated through the selection of the computer’s constituent objects. The same goes for Lego structures, large language models, new pharmaceutical drugs, the ‘technosphere’, or any other complex object.

The consequences of objects having an intrinsic material depth in time are far-reaching. In the block universe, everything is treated as static and existing all at once. This means that objects cannot be ordered by their depth in time, and selection and evolution cannot be used to explain why some objects exist and not others. Re-conceptualising time as a physical dimension of complex matter, and setting a directionality for time, could help us solve such questions. Making time material through assembly theory unifies several perplexing philosophical concepts related to life in one measurable framework. At the heart of this theory is the assembly index, which measures the complexity of an object. It is a quantifiable way of describing the evolutionary concept of selection by showing how many alternatives were excluded to yield a given object. Each step in the assembly process of an object requires information, memory, to specify what should and shouldn’t be added or changed. In building the Lego Taj Mahal, for example, we must take a specific sequence of steps, each directing us toward the final building. Each misstep is an error, and if we make too many errors we cannot build a recognisable structure. Copying an object requires information about the steps that were previously needed to produce similar objects.

This makes assembly theory a causal theory of physics, because the underlying structure of an assembly space – the full range of required combinations – orders things in a chain of causation. Each step relies on a previously selected step, and each object relies on a previously selected object. If we removed any steps in an assembly pathway, the final object would not be produced. Buzzwords often associated with the physics of life, such as ‘theory’, ‘information’, ‘memory’, ‘causation’ and ‘selection’, are material because objects themselves encode the rules to help construct other ‘complex’ objects. This could be the case in mutual catalysis where objects reciprocally make each other. Thus, in assembly theory, time is essentially the same thing as information, memory, causation and selection. They are all made physical because we assume they are features of the objects described in the theory, not the laws of how these objects behave. Assembly theory reintroduces an expanding, moving sense of time to physics by showing how its passing is the stuff complex objects are made of: the size of the future increases with complexity.

This new conception of time might solve many open problems in fundamental physics. The first and foremost is the debate between determinism and contingency. Einstein famously said that God ‘does not play dice’, and many physicists are still forced to conclude that determinism holds, and our future is closed. But the idea that the initial conditions of the Universe, or any process, determine the future has always been a problem. In assembly theory, the future is determined, but not until it happens. If what exists now determines the future, and what exists now is larger and more information-rich than it was in the past, then the possible futures also grow larger as objects become more complex. This is because there is more history existing in the present from which to assemble novel future states. Treating time as a material property of the objects it creates allows novelty to be generated in the future.

Novelty is critical for our understanding of life as a physical phenomenon. Our biosphere is an object that is at least 3.5 billion years old by the measure of clock time (Assembly is a different measure of time). But how did life get started? What allowed living systems to develop intelligence and consciousness? Traditional physics suggests that life ‘emerged’. The concept of emergence captures how new structures seem to appear at higher levels of spatial organisation that could not be predicted from lower levels. Examples include the wetness of water, which is not predicted from individual water molecules, or the way that living cells are made from individual non-living atoms. However, the objects traditional physics considers emergent become fundamental in assembly theory. From this perspective, an object’s ‘emergent-ness’ – how far it departs from a physicist’s expectations of elementary building blocks – depends on how deep it lies in time. This points us toward the origins of life, but we can also travel in the other direction.

If we are on the right track, assembly theory suggests time is fundamental. It suggests change is not measured by clocks but is encoded in chains of events that produce complex molecules with different depths in time. Assembled from local memory in the vastness of combinatorial space, these objects record the past, act in the present, and determine the future. This means the Universe is expanding in time, not space – or perhaps space emerges from time, as many current proposals from quantum gravity suggest. Though the Universe may be entirely deterministic, its expansion in time implies that the future cannot be fully predicted, even in principle. The future of the Universe is more open-ended than we could have predicted.

Time may be an ever-moving fabric through which we experience things coming together and apart. But the fabric does more than move – it expands. When time is an object, the future is the size of the Universe.
