They are actually trying to patent censorship. Oh, the irony. And just how do you float a novel concept?

Raw data and ideas are best accessed randomly and evaluated by random interactions. This throws up a majority opinion and, over time, any shift in that opinion. Just what is wrong with that? AI can point to the best exponent of known majority opinion. This may even balk the CIA on Wikipedia.

My key take-home is that decisions can be made by statistical reasoning, with alternative explanations flagged as alternative. Think about my developing alternative for prehistory.

No one intervenes in the flow of knowledge unless they have an agenda. Otherwise, why bother? Do you actually care about prehistory?

Google pans for gold

Google wants to make sure the content it serves to users is of the highest quality.

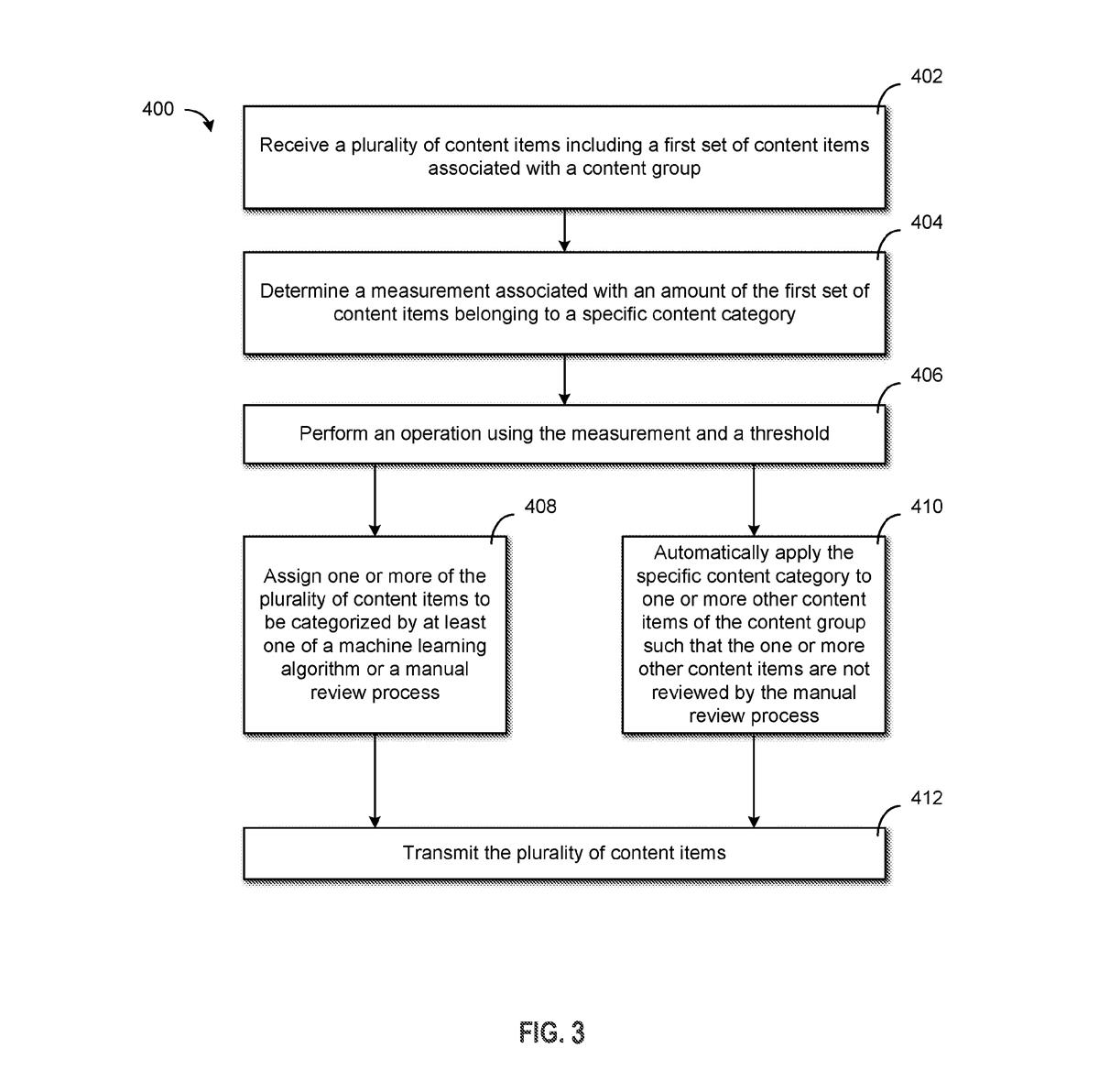

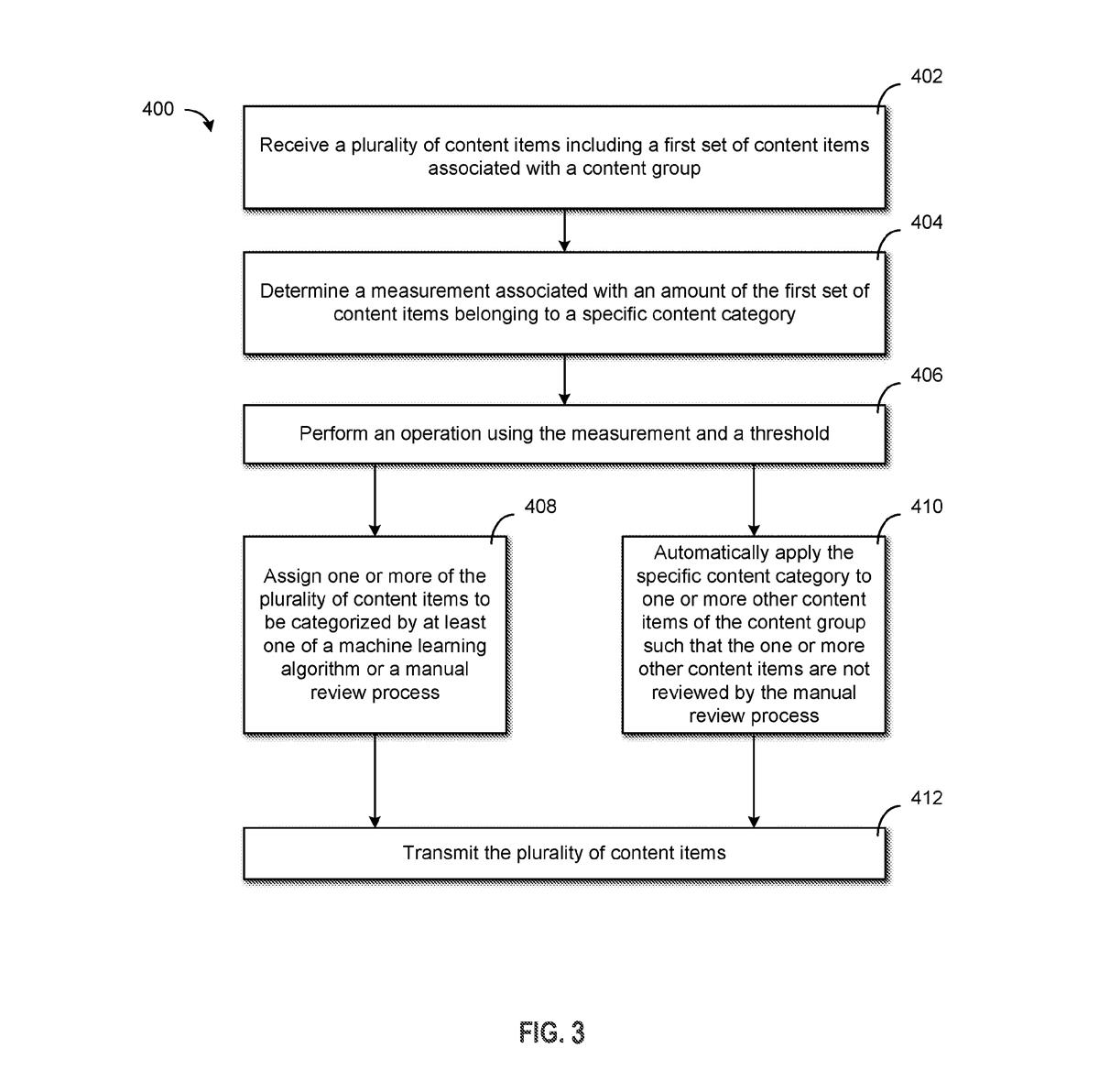

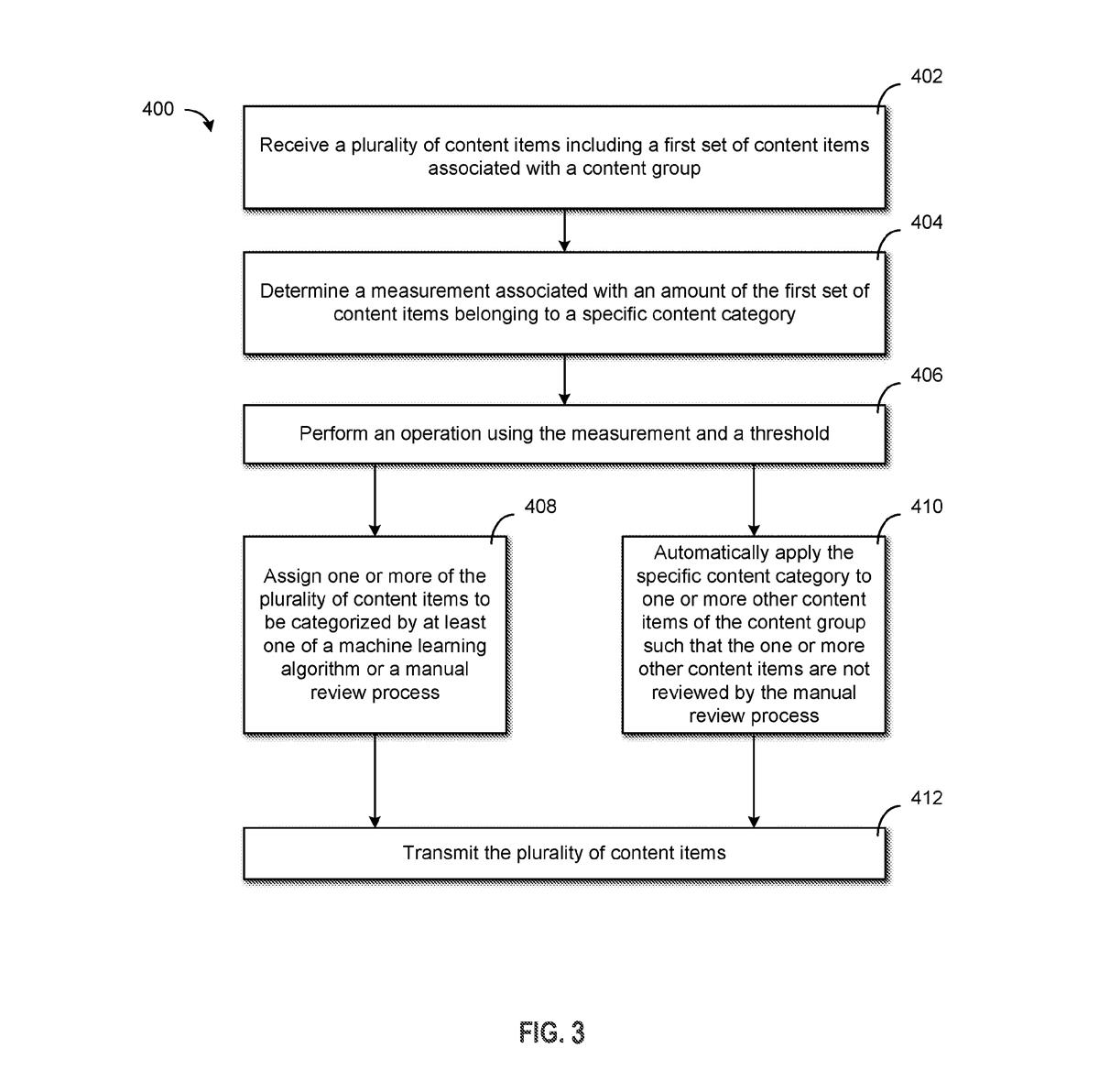

The company filed a patent application for a system to “protect against exposure” to content that violates a content policy. As the name implies, this system hides content items based on whether or not they violate a platform’s policies, using a machine learning algorithm to make that determination.

The system relies on a machine learning model that’s trained to identify when content isn’t in line with a platform’s content policy. Google says the system may tackle a broad array of content, including text, images, video and sound. If the content surpasses a certain threshold, it’s withheld from going viral.

Google’s method uses what it calls a “proportional response system” to make determinations about content items in batches, essentially figuring out what percentage of the content within the batch surpasses the threshold to decide whether or not to classify the entire batch as in violation of policies.

However, Google doesn’t stop at an AI-based flagging system. If the machine learning model is stumped by a particular batch of content, the system requests a human reviewer to check it out and make the determination. This could lighten the load of manual content moderators by only requesting reviews when the AI can’t figure it out.
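As a rough illustration of the batch logic described above, here is a minimal Python sketch. It is not taken from the patent: the function name `classify_batch`, the threshold values, and the idea that the model counts as "stumped" when the flagged share sits close to the cutoff are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class BatchDecision:
    violating_fraction: float  # share of items whose score exceeds the item threshold
    verdict: str               # "violation", "ok", or "needs_human_review"


def classify_batch(scores, item_threshold=0.8, batch_fraction=0.5, uncertainty_band=0.1):
    """Decide on a whole batch from per-item policy-violation scores.

    All parameter values are hypothetical. `scores` stands in for the
    output of a trained model, one score in [0, 1] per content item.
    """
    if not scores:
        raise ValueError("batch must contain at least one score")

    # Count items that individually surpass the threshold.
    flagged = sum(1 for s in scores if s > item_threshold)
    fraction = flagged / len(scores)

    # Assumption: a batch near the cutoff is treated as too close to call
    # and handed to a human reviewer.
    if abs(fraction - batch_fraction) <= uncertainty_band:
        verdict = "needs_human_review"
    elif fraction > batch_fraction:
        verdict = "violation"
    else:
        verdict = "ok"
    return BatchDecision(fraction, verdict)


print(classify_batch([0.9, 0.95, 0.85, 0.1]).verdict)  # three of four items flagged
print(classify_batch([0.9, 0.9, 0.1, 0.1]).verdict)    # exactly at the cutoff
```

Note how much rides on the chosen numbers: move `batch_fraction` or `uncertainty_band` and the same batch gets a different verdict, which is where any agenda would be encoded.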

Google said its system could prevent content with adult themes, such as videos with alcohol, firearms or tobacco, from being served to “impressionable audiences,” without needing a human reviewer to categorize every single content item that gets posted to a platform. Its system could also reduce the chance of inappropriate content being incorrectly flagged as safe for all audiences.

Photo via the U.S. Patent and Trademark Office.

While AI content moderation has typically been good at classifying topics in text, it has had a harder time understanding images, video and audio, allowing things to slip through the cracks, said Grant Fergusson, Equal Justice Works fellow at the Electronic Privacy Information Center. Google’s patent attempts to get around this by batching out these content items and taking action proportionally, essentially making it more cautious.

However, for a machine learning model to take into account a content policy, the policy itself has to be incredibly straightforward, said Fergusson. “So if there are any confusing parts about the content policy, any sort of issues with the content policy that might not make sense from a strict rule standpoint … that's not something that an automated system can do very easily.”

Google’s patent tries to get around this by adding a human reviewer to certain cases, but without the help of human intervention, the intentions of a content policy may get lost in translation, he said.

One potential advantage, however, is that Google’s system could potentially allow it to mold its policies to the regulatory needs of specific states or countries, as regulations regarding social media moderation and content continue to change, said Fergusson.

“This is one opportunity to try and automate the process to make it easier for companies to comply with … this patchwork (of policies) that sometimes don’t make sense,” he said. Whether or not this method will actually work, he noted, “is still up for debate.”

Google has a few things to gain from securing this patent. For one, this tech could be applied to its own services, whether that means monitoring kids’ content on YouTube, taking down misinformation in large batches of search results or even targeting ads in a more curated way, said Fergusson.

Plus, patenting this adds to the myriad of spam-fighting and content moderating inventions that Google has sought to make proprietary. The more it adds to its collection, the more other platforms or sites would become reliant on its services, Fergusson noted. “That’s interesting on a larger, contextual level as we've seen people start to move a bit away from Google searches, going towards searching on other digital platforms,” he said.

Microsoft watches your tone

Microsoft wants to help make emails to that one annoying client sound a little less harsh.

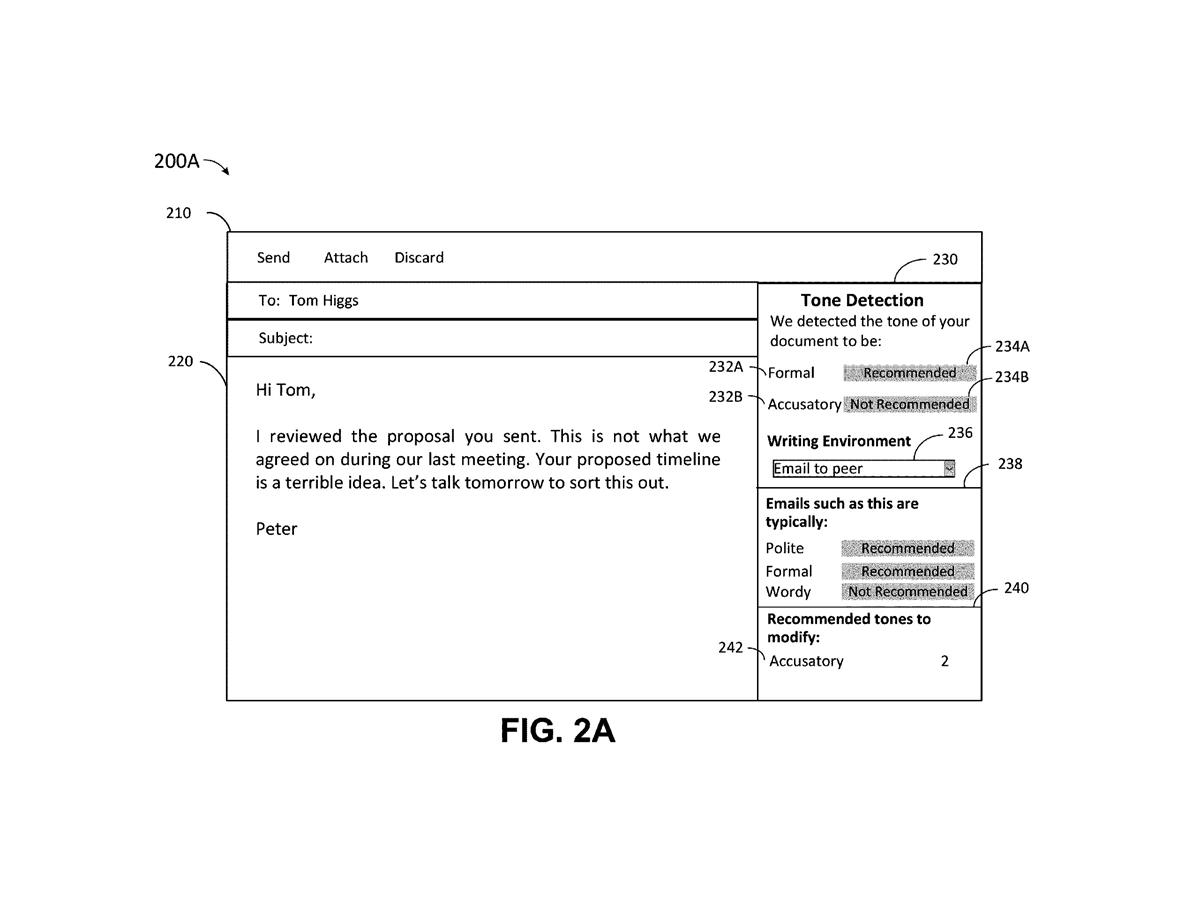

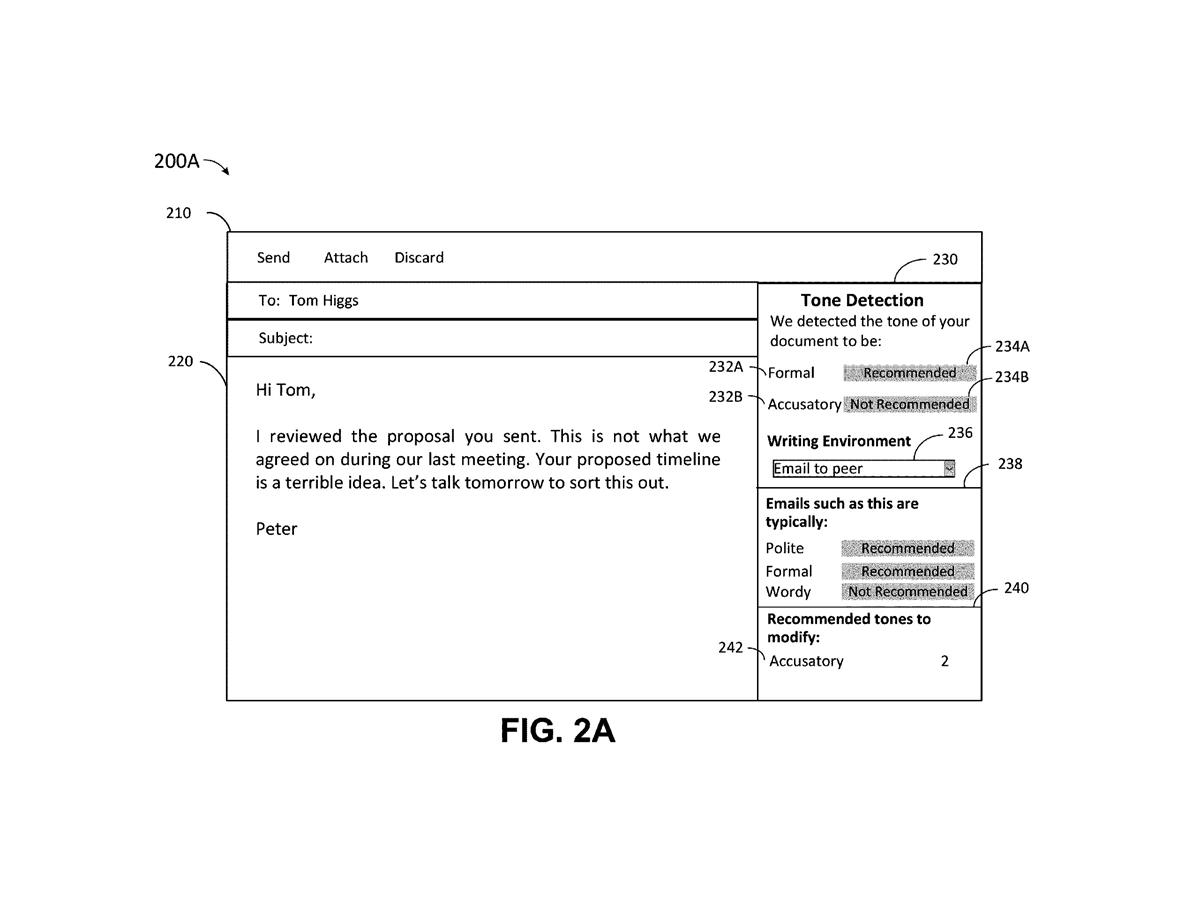

The company is seeking to patent a system for “automatic tone detection and suggestion” in messages. Basically, this helps a user determine the unintended attitude that their messages may give off, whether it be too curt and aggressive, too wordy, or downright inappropriate.

“Sometimes while creating content, the user may be unaware of the emotional attitude carried by their content,” Microsoft noted in its filing. “Furthermore, while some users may notice that the emotional tone carried by their content is inappropriate, they may find it challenging to change the language to convey a proper tone.”

Here’s how it works: Using a machine learning model, this system detects a user’s tone within a section of text, taking into account the “content environment” in which said text is placed. For example, a content environment can include an email, an instant message, a social media post or a document. The system then makes suggestions on whether a user should modify or rephrase that text depending on the tone and the environment. These suggestions may show up as a sidebar of the content environment’s user interface.

Microsoft notes its system can catch an array of tones, including angry, accusatory, disapproving, optimistic, forceful, encouraging, egocentric, unassuming and surprised. For example, if you send an email to your coworker saying, “your proposal is a terrible idea,” the system will flag that as being too aggressive and accusatory.
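To make the role of the "content environment" concrete, here is a toy Python sketch. It swaps the machine learning model for a simple keyword lookup, and the lexicon, the per-environment rules, and the function names are all hypothetical, chosen only to show how the same text could be flagged in one environment and not in another.

```python
# Hypothetical tone lexicon. The filing describes a machine learning model;
# this keyword lookup is only a stand-in for illustration.
TONE_KEYWORDS = {
    "accusatory": ("terrible", "your fault"),
    "angry": ("furious", "unacceptable"),
    "encouraging": ("great work", "well done"),
}

# Hypothetical rules: which detected tones are worth flagging in which environment.
FLAGGED_TONES = {
    "email": {"angry", "accusatory"},
    "instant_message": {"angry"},
}


def detect_tones(text):
    """Return the set of tones whose keywords appear in the text."""
    lowered = text.lower()
    return {
        tone
        for tone, phrases in TONE_KEYWORDS.items()
        if any(phrase in lowered for phrase in phrases)
    }


def tones_to_flag(text, environment):
    """Return the detected tones that the given environment treats as a problem."""
    flagged = detect_tones(text) & FLAGGED_TONES.get(environment, set())
    return sorted(flagged)


message = "Your proposal is a terrible idea"
print(tones_to_flag(message, "email"))            # flagged in a formal setting
print(tones_to_flag(message, "instant_message"))  # tolerated in a casual one
```

Whoever writes the rules table decides what counts as acceptable speech in each setting, so the judgment is built in before the user types a word.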

The company says in its filing that conventional tone detection and rephrasing services don’t take into account the environment that the content is in, making them less attuned to when informal versus formal language is acceptable.

Photo via the U.S. Patent and Trademark Office.

Microsoft’s interest in AI-based productivity tools is far from unprecedented. On the patent side, the company has filed for plenty of AI-powered workplace and monitoring tools, including one that monitors if you’re working off the clock.

More publicly, the company has touted its upcoming 365 Copilot, which integrates large language models with its flagship apps like Word, Excel, PowerPoint, Outlook and Teams. Microsoft is also reportedly looking to integrate AI into its built-in Windows features, like Photos, Snipping Tool and Camera.

But there are several reasons a tool like this may not fly. First, communication norms differ between cultures and generations, and can be more granular at the organizational and individual level. What the AI may deem as an angry email could just be the blunt communication norms built into a company’s culture. Alternatively, people at some companies may be more casual with one another than at others. (For example, as a member of Gen Z, I speak in a much more sarcastic and casual manner than some of my colleagues. Would this system call me out if I said “slay” in an instant message?)

At the end of the day, the effectiveness of this model will differ for every organization that uses it, because AI can only understand context to a certain extent. (FYI, this is an issue that comes up in speech recognition contexts a lot.)

Another consideration: This system has a feedback mechanism to optionally train the model to understand organizational communication norms. If a company has a particularly aggressive or toxic manner of speaking with one another, the system could take that and replicate it further.
