Actually, why not? The actor still owns all the underlying rights, and that makes blatant theft impossible. And who does not want to see Carrie Fisher ride again as she was at her best? Technology just may make it all possible.

Most certainly, this makes all that outtake material useful as well.

Better yet, it also allows many aging actors to overlay a present production with their much younger selves. Think Harrison Ford. Biological destiny creates the human MEME and we do want to sustain it.

So no, humans will not be going out of business, as the human mind wants human imperfection and uniqueness. However, it will become much easier to cast what we can call the naturals that the audience wants to identify with.

In the age of deepfakes, could virtual actors put humans out of business?

In film and video games, we’ve already seen what’s possible with ‘digital humans’. Are we on the brink of the world’s first totally virtual acting star?

Luke Kemp

Mon 8 Jul 2019

https://www.theguardian.com/film/2019/jul/03/in-the-age-of-deepfakes-could-virtual-actors-put-humans-out-of-business

Commonplace in modern cinema ... a digital Carrie Fisher in 2016’s Rogue One: A Star Wars Story. Photograph: Lucasfilm

When you’re watching a modern blockbuster such as The Avengers, it’s hard to escape the feeling that what you’re seeing is almost entirely computer-generated imagery, from the effects to the sets to fantastical creatures. But if there’s one thing you can rely on to be 100% real, it’s the actors. We might have virtual pop stars like Hatsune Miku, but there has never been a world-famous virtual film star.

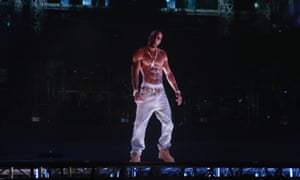

Even that link with corporeal reality, though, is no longer absolute. You may have already seen examples of what’s possible: Peter Cushing (or his image) appearing in Rogue One: A Star Wars Story more than 20 years after his death, or Tupac Shakur performing from beyond the grave at Coachella in 2012. We’ve seen the terrifying potential of deepfakes – manipulated footage that could play a dangerous role in the fake news phenomenon. Jordan Peele’s remarkable fake Obama video is a key example. Could technology soon make professional actors redundant?

Like most of the examples above, the virtual Tupac is a digital human, and was produced by special effects company Digital Domain. Such technology is becoming more and more advanced. Darren Hendler, the company’s digital human group director, explains that it is in effect a “digital prosthetic” – like a suit that a real human has to wear.

“The most important thing in creating any sort of digital human is getting that performance. Somebody needs to be behind it,” he explains. “There is generally [someone] playing the part of the deceased person. Somebody that’s going to really study their movements, facial tics, their body motions.”

‘The most important thing in creating a digital human is getting that performance’ ... digital Tupac Shakur at Coachella in 2012. Photograph: Christopher Polk/Getty Images

Digital and digitally altered humans are commonplace in modern cinema. Recent examples include de-aging actors such as Samuel L Jackson in Captain Marvel, and Sean Young’s image in Blade Runner 2049. And you can almost guarantee the use of digital humans in any modern story-led video game: motion-captured actors give their characters lifelike movement and facial expressions.

Hendler stresses that the performer’s skill will make or break any digital human, which is little use without a real person wearing it as a second skin. Virtual humans, on the other hand, could operate autonomously, their speech and expressions driven by AI. Their development and use is often restricted to research projects, and therefore not visible to the public, but perhaps that will change soon.

Thanks to funding from the UK government’s Audience of the Future programme, Maze Theory will be leading development on a virtual reality game based on the BBC show Peaky Blinders. As CEO Ian Hambleton explains, the use of “AI narrative characters” promises a brand new experience. “For example, if you get really aggressive and in the face of a character, they will react; and they will change not only what they’re saying, but their body language, their facial expressions,” he says. An AI “black box” will ultimately drive the performances.

Could we one day see aesthetically convincing digital humans combined with the AI-driven virtual humans to produce entirely artificial actors? That, theoretically, could lead to performances without the need for human actors at all. Yuri Lowenthal, an actor whose work includes the title role in PS4 game Spider-Man, wonders: “Your everyday person is not in the business of voluntarily having their data captured on a large-scale like I am. When people record all the data from my performances, and details of my face and my voice, what does the future look like? How long will it be before you could create a performance out of nothing?”

“That’s still pretty far away,” says Hendler. “Artificial intelligence is expanding so rapidly that it’s really hard for us to predict ... I would say that within the next five to ten years, [we’ll see] things that are able to construct semi-plausible versions of a full-facial performance.”

Voice is also a consideration, says Arno Hartholt, director of research and development integration at the University of Southern California’s Institute for Creative Technologies, as it would be very difficult to artificially generate a new performance from clips of a real actor’s speech. “You would have to do it as a library of performances,” he says. “You need a lot of examples, not only of how a voice sounds naturally, but how does it sound while it’s angry? Or maybe it’s a combination of angry and being hurt, or somebody’s out of breath. The cadence of the speech.”

It’s not even as simple as collecting a huge amount of existing performance data because, as Hartholt goes on to point out, a range of characters played by the same actor won’t produce a consistent data-set of their speech. The job of the human actor is safe – for now, at least.

Nonetheless, Hendler believes that the advancement of digital and virtual human technology will become more visible to the public, coming much closer to home – literally – within the next 10 years or so: “You may have a big screen on your wall and have your own personal virtual assistant that you can talk to and interact with: a virtual human that’s got a face, and moves around the house with you. To your bed, your fridge, your stove, and to the games that you’re playing.”

“A lot of this might sound pretty damn creepy, but elements of it have been coming for a while now.”
